In the race to dominate artificial intelligence (AI) infrastructure, the announcements of ever-larger datacentres have become marquee events. One recent headline-maker is the facility by Microsoft in Mount Pleasant, Wisconsin, dubbed “the world’s most powerful AI datacentre.” But a closer look reveals that while the scale is indeed enormous, many of the assumptions and implications behind the project deserve a more critical inspection. This investigation will explore how this facility is constructed, what it claims to be, what it actually is, and where the gaps, trade-offs and broader questions lie.

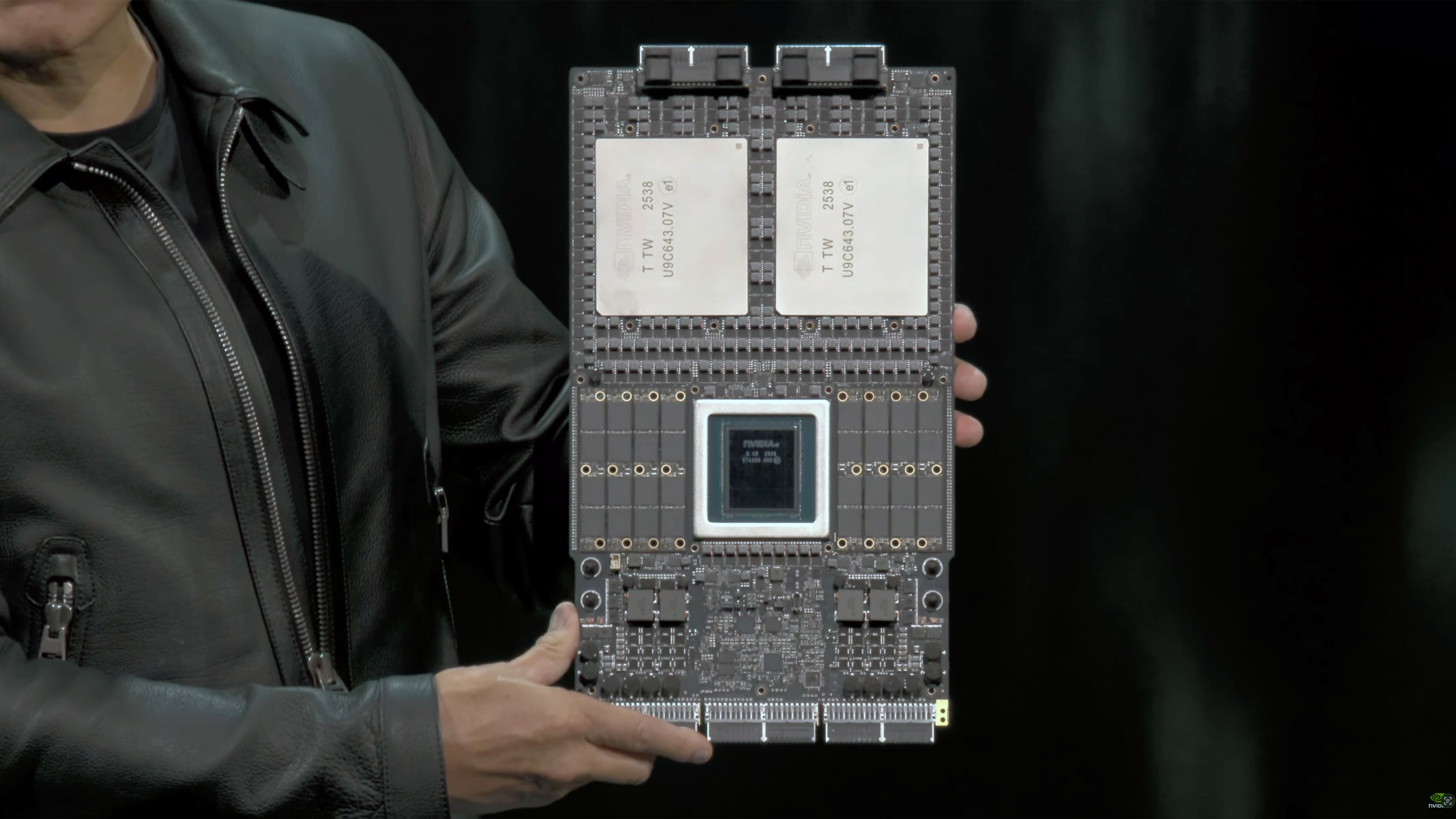

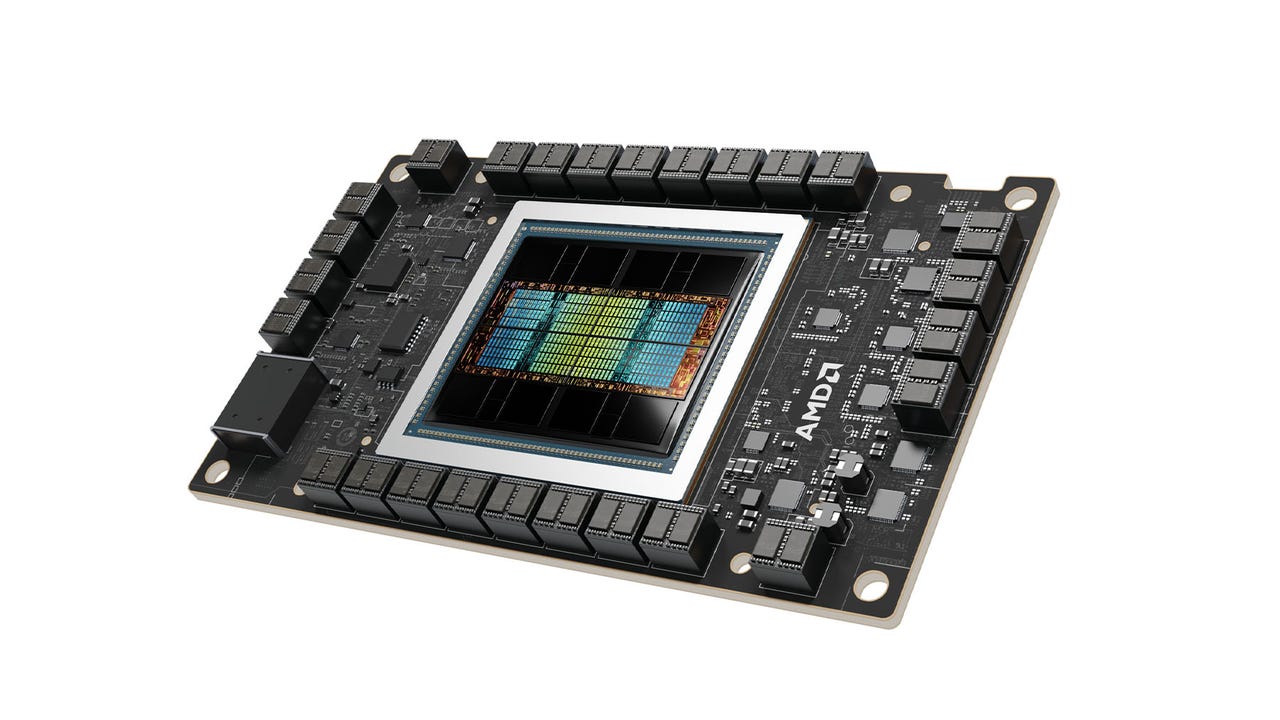

The Vision vs. Reality: What is being built

The official story: Microsoft is investing in a mega-datacentre complex (three buildings totalling 1.2 million sq ft on a 315-acre site) to accelerate frontier-AI training and inference. The facility will house “hundreds of thousands” of NVIDIA GB200/GB300 GPUs, connected by enough fibre-optic cable to circle the Earth 4.5 times.

The ambition is stunning: Microsoft claims this facility will deliver ten times the performance of today’s fastest supercomputer. From a hardware and infrastructure standpoint, the facility is indeed built for scale: racks with 72 Blackwell GPUs each, delivering 1.8 terabytes per second of GPU-to-GPU bandwidth and giving each GPU access to 14 TB of pooled memory.

But this is only one side of the story — scale does not automatically equate to operational readiness, cost-effectiveness, or sustainable utility.

Where the “Isn’t What It Seems” Starts

Oversized expectations on operational utility

While the promotional materials emphasise massive scale and throughput, practical deployment of such a datacentre comes with significant delays, technical risks and uncertain ROI. For example, although construction is in its final phases, go-live is not scheduled until early 2026. The larger the facility, the more complex the supply chain, cooling, networking and management issues become, particularly when cutting-edge GPUs, advanced interconnects, energy systems and custom cooling are involved.

Energy, power and environmental trade-offs

The power demand of such facilities is staggering. For instance, another project under development in South Korea aims for 3 GW of capacity by 2028, making it potentially the “largest” by capacity but raising massive energy and grid-integration questions. The Wisconsin facility similarly relies on enormous electrical and cooling infrastructure. The fact that a facility is large and “state of the art” does not guarantee that its environmental footprint or energy economics are optimised. Indeed, the promotional claim of “ten times” the performance must be weighed against whether the incremental benefits justify the incremental energy, capital and operational costs.

Business model and actual usage

One key question: How will the facility be used? For training the next generation of large language models (LLMs)? For serving inference workloads at scale? For internal Microsoft AI systems, or also third-party clients? While statements suggest both internal and external use, the monetisation and capacity-utilisation path is unclear. Without high utilisation, even a technically capable facility becomes an expensive asset with under-leveraged value.

Strategic framing vs sustainable design

Marketing narratives emphasise being the “world’s most powerful” or “largest” facility. But “largest” depends on the metric chosen (power capacity? floor area? number of GPUs?). A competing project in South Korea claims 3 GW of capacity and a $35 billion scale, which would potentially outstrip the Wisconsin facility in terms of raw power draw. Thus, claims of “world’s largest” are context-dependent.

In addition, with such a big build, risks of obsolescence or under-utilisation rise: AI hardware cycles are fast-moving, GPUs get superseded, new architectures emerge — so what seems cutting-edge at build-time may be less so a few years later.

Key Implications and Critical Questions

For the AI ecosystem

On one hand, this type of investment signals that large-scale compute remains a major bottleneck for frontier AI. The facility will help reduce compute constraints and may accelerate research and deployment of more advanced models. On the other hand, concentrating compute in a few mega-facilities raises questions about diversity, accessibility and centralisation of AI infrastructure. Will smaller players be able to access comparable compute? Will the infrastructure lock in big tech’s advantage?

For local communities and the grid

Mega-datacentres of this size can stress local power grids, water supplies (for cooling) and local environmental infrastructure. While Microsoft emphasises “closed-loop” cooling in some reports, the sheer scale of the build means that local utility and environmental impacts need more scrutiny. For nearby residents, the benefits (jobs, investment) must be weighed against potential risks (noise, heat, grid strain, new transmission lines).

Also, the question of how this facility affects global energy consumption is relevant: if one centre can consume as much as a small city, the aggregate of multiple such centres raises macro-energy concerns.

For sustainability and efficiency

Big claims of “ten times faster” or “hundreds of thousands of GPUs” mask the fact that efficiency (compute per watt, compute per dollar) matters more than raw scale. If a smaller, more efficient cluster can deliver 80 % of the effective performance at 40 % of the cost, then the large-scale centre may be the less optimal choice. The risk: building “bigger” when “better” might have been more cost-effective and sustainable.
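To make the 80 % / 40 % comparison concrete, here is a minimal Python sketch of the arithmetic. The function name `performance_per_cost` and the normalisation of the large facility to 1.0 are illustrative assumptions; the figures are the hypothetical ones from the paragraph above, not measurements of any real cluster.

```python
def performance_per_cost(relative_performance: float, relative_cost: float) -> float:
    """Performance delivered per unit of cost, both relative to a baseline."""
    if relative_cost <= 0:
        raise ValueError("relative_cost must be positive")
    return relative_performance / relative_cost


# Baseline (assumption): the large facility, normalised to 1.0 performance at 1.0 cost.
large = performance_per_cost(1.0, 1.0)

# Hypothetical smaller cluster from the text: 80 % of the performance at 40 % of the cost.
small = performance_per_cost(0.8, 0.4)

# Ratio of the two: how much more performance each unit of spend buys.
advantage = small / large
print(f"Smaller cluster: {advantage:.1f}x the performance per unit of cost")
```

Under these assumed numbers the smaller cluster buys twice as much performance per unit of spend, which is the sense in which “better” can beat “bigger” even while delivering less in absolute terms.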

For competitiveness and geopolitical dynamics

Large datacentre projects are often geopolitical; for example, the South Korea project aims to position Korea as an AI infrastructure leader. Similarly, Microsoft’s facility may help the U.S. maintain a lead in AI infrastructure. But global competition means that “winning” with scale alone may not suffice; efficiency, ecosystem access, software and talent also matter.

Why It Still Matters, With Some Caveats

Despite the caveats, the significance of this facility is high. It does push the frontier of what is technically possible, signals heavy commitment to AI infrastructure, and may drive innovation by providing a substrate for large model training. The “world’s largest” label carries symbolic value: it attracts talent, funding, and press.

However, it is crucial to interpret the claims with nuance: “largest” does not automatically mean “most efficient,” “most sustainable,” or “most accessible.” For stakeholders (researchers, local communities, policy makers), the key is to ask: How will this facility be used? What is the cost per useful unit of compute? What are the grid, environmental and community trade-offs? How open is the infrastructure, or does it create another island of compute power reserved for large players?

Recommendations & Considerations

Transparency of utilisation: The operators should publish utilisation data (percentage of time GPUs are active, model training vs idle time), to show the asset is not under-leveraged.

Efficiency metrics: Beyond the raw number of GPUs, metrics such as compute per watt, cooling efficiency and cost per training hour matter.

Community and grid impact assessments: Local governments should insist on independent assessments of power draw, heat/cooling discharge, noise, transmission upgrades and water usage.

Access models: Consider whether parts of the infrastructure can be opened to third-party researchers or smaller firms, to avoid concentration of compute.

Future proofing: Given how fast hardware evolves (new chips, new architectures, specialised AI accelerators), the facility design should allow modular upgrades rather than risking being locked into a now-legacy architecture.
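The utilisation and efficiency metrics recommended above can be sketched as simple ratios. The following is a minimal Python illustration only: the `ClusterReport` record, its field names and the placeholder numbers are all hypothetical, and no operator is known to publish data in this form.

```python
from dataclasses import dataclass


@dataclass
class ClusterReport:
    """Hypothetical reporting record; field names are illustrative only."""
    gpu_hours_available: float  # GPUs installed x hours in the reporting period
    gpu_hours_busy: float       # GPU-hours actually spent running jobs
    useful_flop: float          # floating-point operations completed
    energy_kwh: float           # total facility energy, including cooling
    total_cost: float           # capital plus operating cost for the period

    def utilisation(self) -> float:
        """Fraction of available GPU time that was in use."""
        return self.gpu_hours_busy / self.gpu_hours_available

    def flop_per_joule(self) -> float:
        """Compute per watt: FLOP per joule equals FLOP/s per watt (1 kWh = 3.6e6 J)."""
        return self.useful_flop / (self.energy_kwh * 3.6e6)

    def cost_per_busy_gpu_hour(self) -> float:
        """Cost spread over the GPU-hours that did useful work."""
        return self.total_cost / self.gpu_hours_busy


# Placeholder numbers chosen only to exercise the arithmetic; not real data.
report = ClusterReport(
    gpu_hours_available=1000.0,
    gpu_hours_busy=600.0,
    useful_flop=7.2e18,
    energy_kwh=2000.0,
    total_cost=3000.0,
)
print(f"utilisation: {report.utilisation():.0%}")
print(f"FLOP per joule: {report.flop_per_joule():.2e}")
print(f"cost per busy GPU-hour: {report.cost_per_busy_gpu_hour():.2f}")
```

Dividing cost by busy rather than installed GPU-hours is a deliberate choice in this sketch: it makes idle capacity show up directly as a higher cost per useful unit of compute.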

Conclusion

The “world’s largest AI datacentre” is indeed a machine of immense scale, a monument to the infrastructure arms race powering modern AI. What it is not is a guarantee of flawless advantage, perfect efficiency or unmitigated benefit. The headline of “largest” masks a complex web of trade-offs: power, cost, utility, community impact, access and sustainability. For all the promise, we should treat such mega-facilities with a healthy measure of skepticism and demand clarity about how they deliver value, not just how big they are.